Motion

Here is some information explaining the specs and formats of the Motion Dataset.

Also check out the benchmarks based on this dataset:

2025 Challenges: Interaction Prediction, Sim Agents, Scenario Generation

Previous challenges can be found here.

Overview

The Motion dataset is provided as sharded TFRecord format files containing protocol buffer data. The data are split into training, test, and validation sets with a split of 70% training, 15% testing and 15% validation data.

The dataset, containing an unlabeled mixture of data collected in both manually-driven and autonomously-driven modes, is composed of 103,354 segments each containing 20 seconds of object tracks at 10Hz and map data for the area covered by the segment. These segments are further broken into 9 second windows (1 second of history and 8 seconds of future data) with varying overlap. The data is provided in two forms. The first form is stored as Scenario protocol buffers. The second form converts the Scenario protos into tf.Example protos containing tensors for use in building models. Details of both formats follow at the end of the page.

Scenario proto | tf.Example | |

|---|---|---|

Segment length | 9 seconds (1 history, 8 future) | 9 seconds (1 history, 8 future) |

Maps | Vector maps | Sampled as points |

Representation | Single proto | Set of tensors |

To enable the motion prediction challenge, the ground truth future data for the test set is hidden from challenge participants. As such, the test sets contain only 1 second of history data. The training and validation sets contain the ground truth future data for use in model development. In addition, the test and validation sets provide a list of up to 8 object tracks in the scene to be predicted. These are selected to include interesting behavior and a balance of object types.

Data Sampling

Each 9 second sequence in either the training or validation set contains 1 second of history data, 1 sample for the current time, and 8 seconds of future data at 10 Hz sampling. This corresponds to 10 history samples, 1 current time sample, and 80 future samples for a total of 91 samples. The test set hides the ground truth future data for a total of 11 samples (10 history and 1 current time sample).

Coordinate frames

All coordinates in the dataset are in a global frame with X as East, Y as North and Z as up. The origin of the coordinate system changes in each scene. The origin is an arbitrary point and may be far from the objects in the scene. All units are in meters.

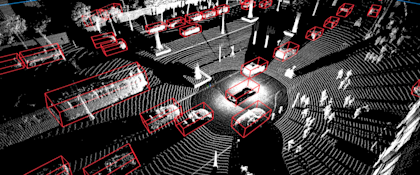

Lidar Data

The 1.2.0 release of the Motion Dataset adds Lidar points for the first 1 second of each of the 9 second windows.

The Lidar data has a compressed format. After decompression, it has the same format as the Perception Dataset lidar data. For more details, please refer to our paper WOMD-LiDAR [1], and the tutorial for decompressing and using the Lidar data in the Motion Dataset.

[1] Kan Chen, Runzhou Ge, Hang Qiu, Rami Al-Rfou, Charles R. Qi, Xuanyu Zhou, Zoey Yang, Scott Ettinger, Pei Sun, Zhaoqi Leng, Mustafa Baniodeh, Ivan Bogun, Weiyue Wang, Mingxing Tan, Dragomir Anguelov. "Womd-lidar: Raw sensor dataset benchmark for motion forecasting." Proceedings of ICRA 2024.

Camera embeddings

The 1.2.1 WOMD release now provides camera data, including front, front-left, front-right, side-left, side-right, rear-left, rear-right, and rear sensors. Similar to the Lidar data, the camera data of the training, validation and testing sets cover the first 1 second of each of the 9 second windows. Instead of releasing raw camera images, we release the image tokens and image embedding extracted from a pre-trained VQ-GAN model. For more details, please refer to our paper MoST [1], and the updated tutorial on github.

[1] Norman Mu, Jingwei Ji, Zhenpei Yang, Nate Harada, Haotian Tang, Kan Chen, Charles R. Qi, Runzhou Ge, Kratarth Goel, Zoey Yang, Scott Ettinger, Rami Al-Rfou, Dragomir Anguelov, Yin Zhou. "MoST: Multi-modality Scene Tokenization for Motion Prediction." Proceedings of CVPR 2024.

Scenario Proto format

Below is an overview of the Scenario protocol buffer format. Please see the Scenario proto definition for full details.

The scenario proto contains a set of object tracks each containing an object state for each time step in the scenario. It also contains static map features and a set of dynamic map features (e.g. traffic signals) for each time step.

Here is an outline of the proto fields:

Scenario

scenario_id - A unique string identifier for this scenario.

timestamps_seconds - Repeated field containing timestamps for each step in the Scenario starting at zero.

tracks - Repeated field containing tracks for each object.

id - A unique numeric ID for each object.

object_type - The type of object for this track (vehicle, pedestrian, or cyclist).

states - Repeated field containing the state of the object for each time step containing its 3D position, velocity, heading, dimensions, and a valid flag. This field corresponds to the top level

timestamps_secondsfield such thattracks[i].states[j]indexes the ith agent's state at timetimestamps_seconds[j]

dynamic_map_states - Repeated field containing traffic signal states across time steps such that

dynamic_map_states[i]occurs attimestamps_seconds[i]lane_states - Repeated field containing the set of traffic signal states and the IDs of lanes they control (indexes into the map_features field) for a given time step.

map_features - Repeated field containing the set of map data for the scenario. This includes lane centers, lane boundaries, road boundaries, crosswalks, speed bumps, and stop signs. Map features are defined as 3D polylines or polygons. See the map proto definitions for full details.

sdc_track_index - The track index of the autonomous vehicle in the scene.

objects_of_interest - Repeated field containing indices into the tracks field of objects determined to have behavior that may be useful for research training.

tracks_to_predict - Repeated field containing a set of indices into the tracks field indicating which objects must be predicted. This field is provided in the training and validation sets only. These are selected to include interesting behavior and a balance of object types.

current_time_index - The index into timestamps_seconds for the current time. All steps before this index are history data and all steps after this index are future data. Predictions are to be made at the current time.

compressed_frame_laser_data - Repeated fields containing per time step Lidar data. This contains lidar data up to the current time step (first 1 second) for each segment. Note that this field is only populated in the lidar data split of the Motion Dataset.

For any time step where a state’s valid bit is set to false, there is no measurement of the object state provided in this dataset. Also be aware that there are objects included which have no valid states in the 1 second past history but only have valid states in the future time steps. While these cannot be used for motion prediction, they are included in the dataset for visualization purposes or for research in predicting unseen objects in the future.

tf.Example Proto format

Each tf.Example proto contains the same information as the Scenario protos described above, but all data has been converted to tensors. Please see the tf.Example proto definition for full details.

Tutorials

If you would prefer to jump right in, check out the tutorials here. The Github repo also includes a Quick Start with installation instructions for the Waymo Open Dataset supporting code.

Map updates

The v1.2.0 release of the Motion Dataset adds entrances to driveways. Driveway features provide map polygons for entrances to parking lots and other exits from roadways.